Hi there! I completed my CS Ph.D. (Dec 2025) at Stony Brook University, advised by Prof. Arie Kaufman. Before that, I spent 3 years at Lehigh University for my CS B.S. (May 2020), where I worked on protein volume classification with Prof. Brian Chen.

I have interned at:

- ByteDance Seed in Spring 2025, working on video generation with Peng Wang, Yichun Shi, and Ceyuan Yang.

- Video group at Adobe Research in Summer 2024, working on video generation with Yang Zhou and Prof. Feng Liu.

- 3D group at Adobe Research in Summer 2023, working with Xin Sun and Hao Tan.

I am broadly interested in visual generative models (3D, video), large-scale training, and reinforcement learning (RL). My work advances foundation model capabilities (data, rewards, long-sequence modeling) across lifecycle stages (pre-training, post-training, inference).

News

06/15/2025 Attended CVPR 2025 in Nashville, TN.

03/24/2025 Started internship at ByteDance Seed, working on video generation.

12/10/2024 Attended NeurIPS 2024 in Vancouver, BC, Canada.

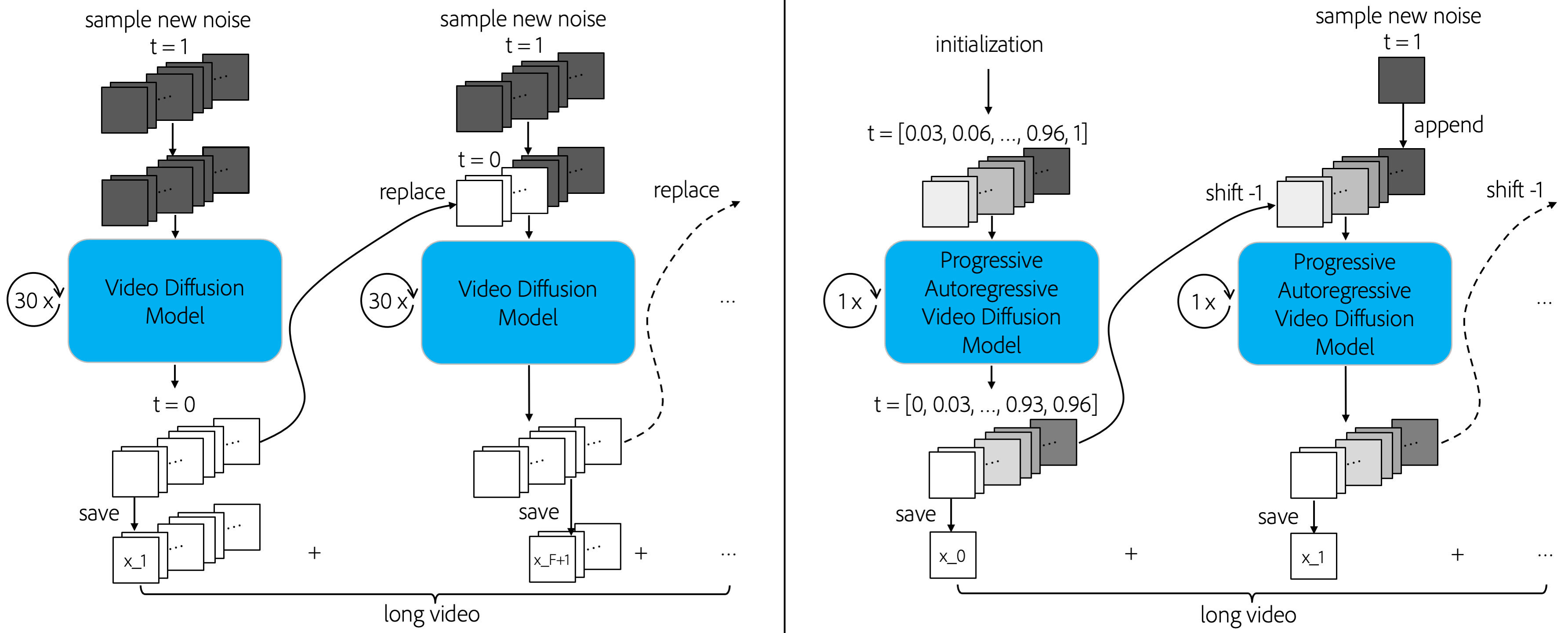

10/10/2024 Progressive Autoregressive Video Diffusion Models is released on arXiv. Proud to build the first general-purpose video diffusion model for 60-second long video generation.

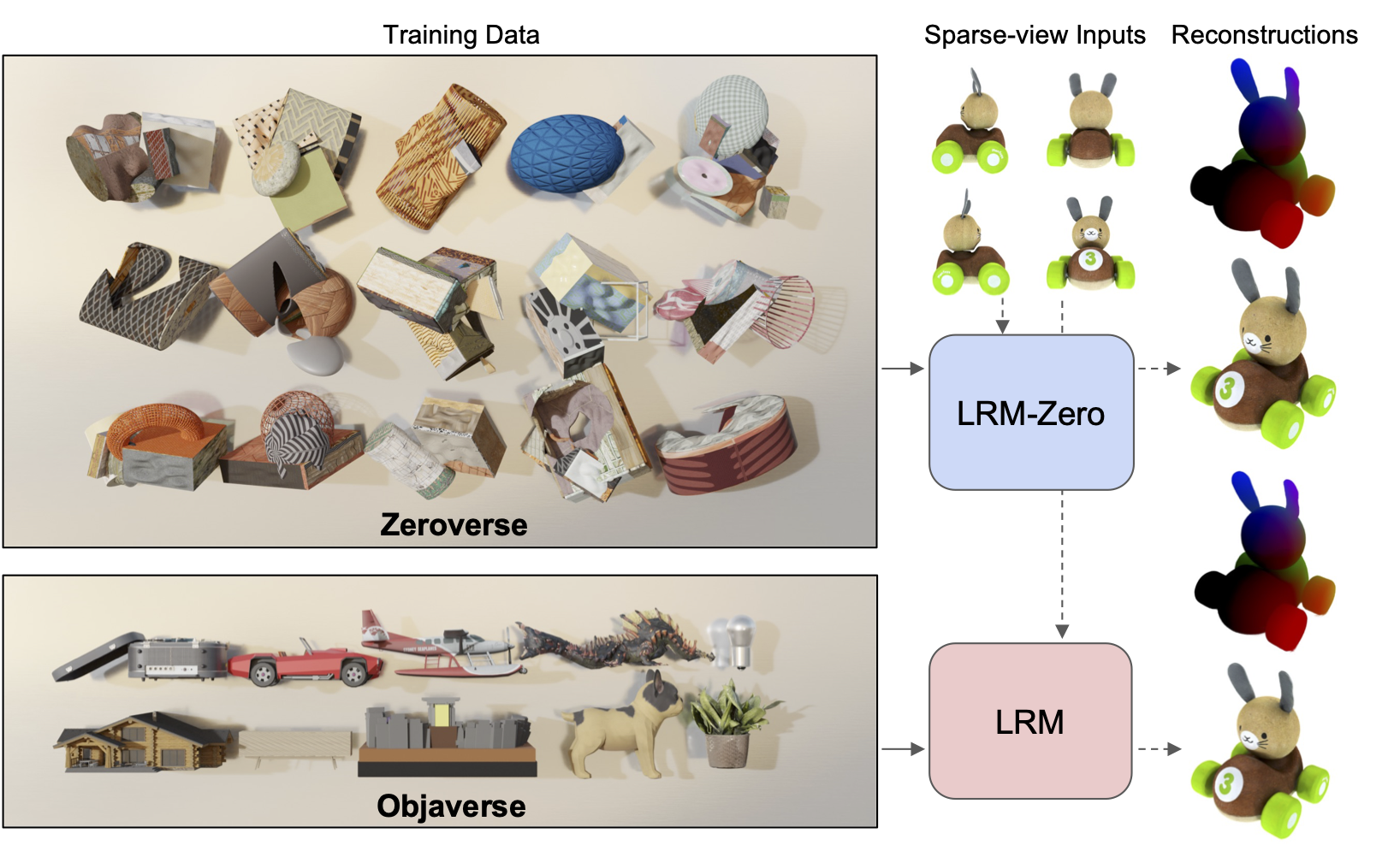

09/25/2024 LRM-Zero is accepted to NeurIPS 2024.

06/17/2024 Attended CVPR 2024 in Seattle, WA. I presented Carve3D and was glad to see LRM-Zero being featured in two workshop invited talks (3DFM and SyntaGen) by Hao Tan and Nathan Carr.

06/13/2024 LRM-Zero is released on arXiv. First time working on large-scale pre-training and data generation, and it was a blast!

05/28/2024 Started my second internship at Adobe Research, this time working on video generation.

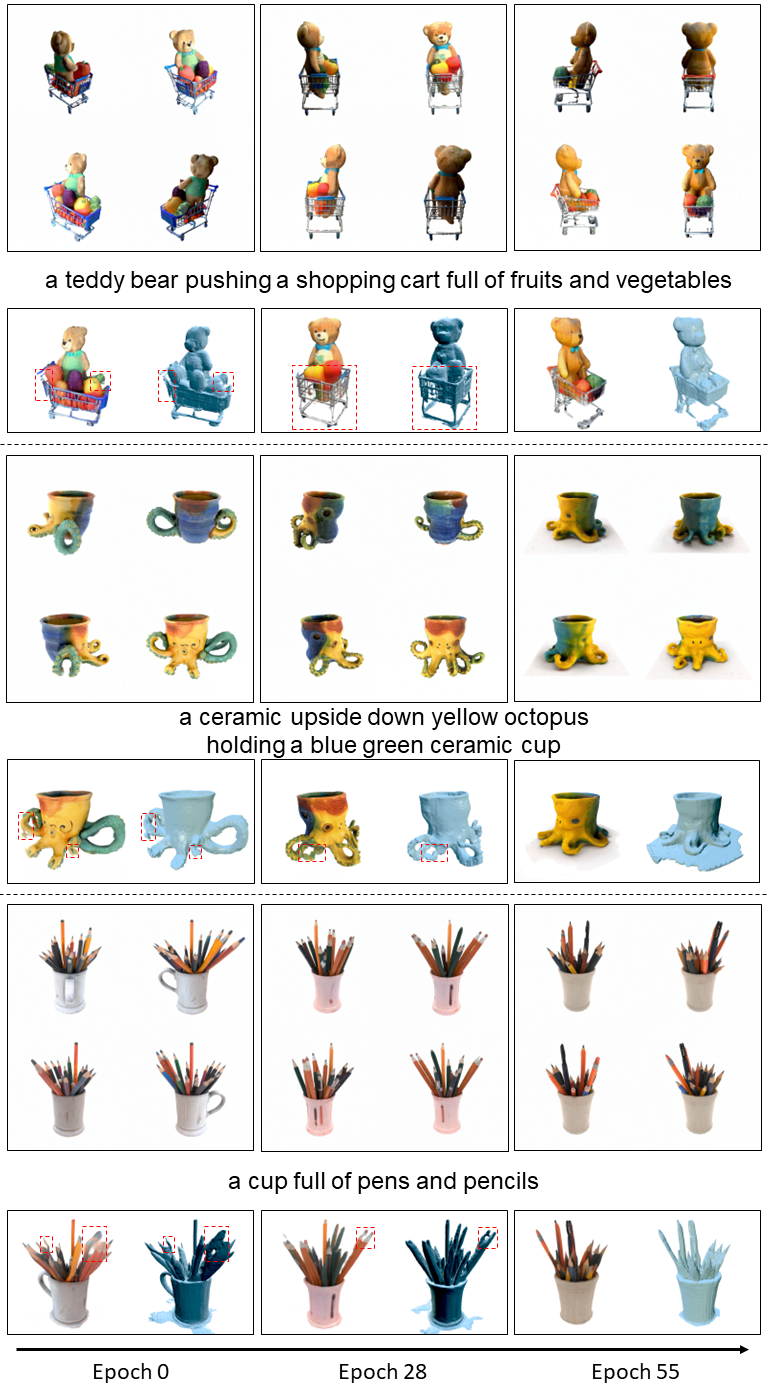

02/26/2024 Carve3D is accepted to CVPR 2024.

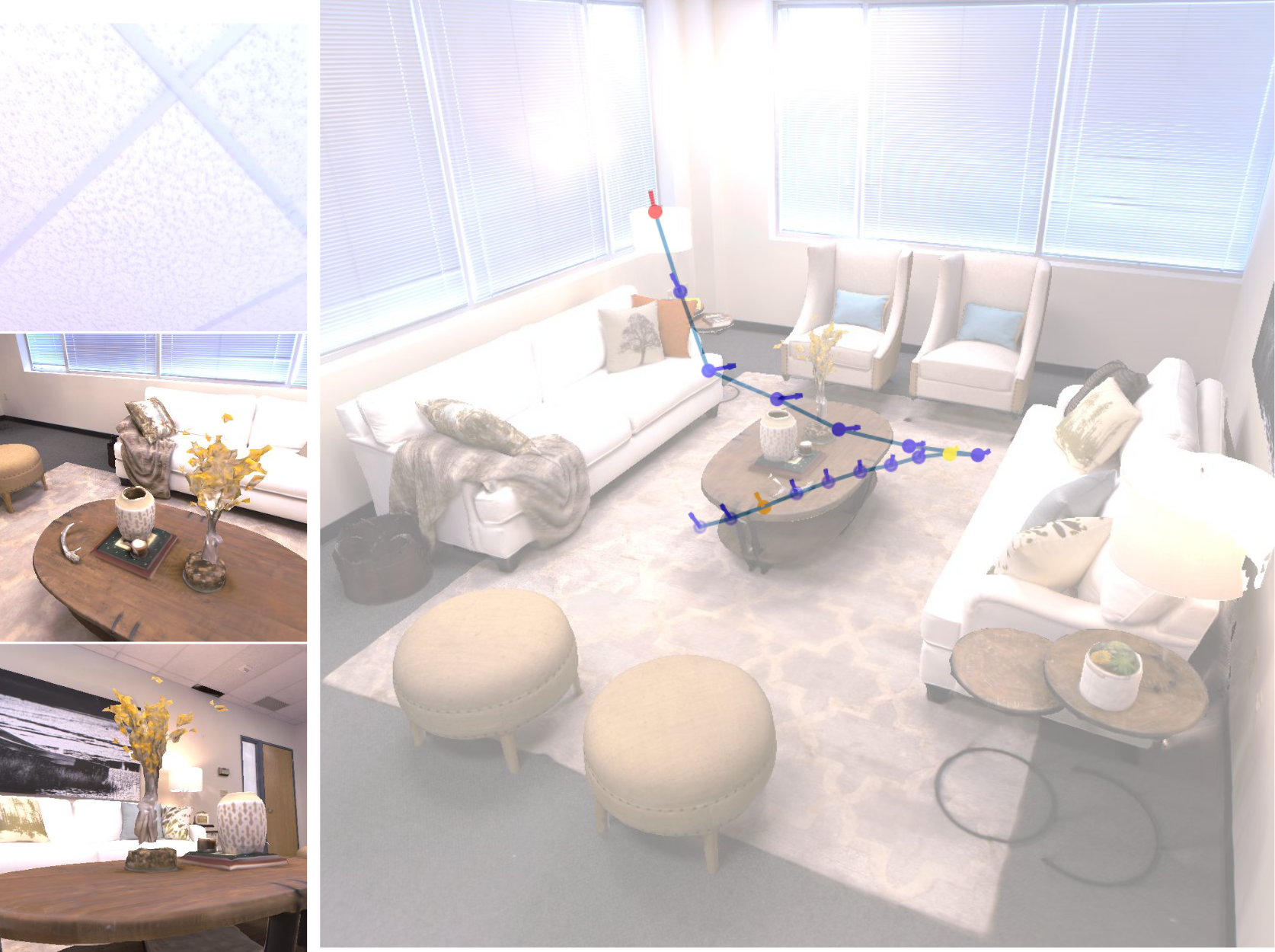

07/17/2023 GAIT is accepted to ICCV 2023.

06/19/2023 Started my internship at Adobe Research.

Publications

Progressive Autoregressive Video Diffusion Models

Desai Xie, Zhan Xu, Yicong Hong, Hao Tan, Difan Liu, Feng Liu, Arie Kaufman, Yang Zhou

CVPR 2025 CVEU Workshop

Project Paper Code

LRM-Zero: Training Large Reconstruction Models with Synthesized Data

Desai Xie, Sai Bi, Zhixin Shu, Kai Zhang, Zexiang Xu, Yi Zhou, Sören Pirk, Arie Kaufman, Xin Sun, Hao Tan

NeurIPS 2024

Project Paper Code

Carve3D: Improving Multi-view Reconstruction Consistency for Diffusion Models with RL Finetuning

Desai Xie, Jiahao Li, Hao Tan, Xin Sun, Zhixin Shu, Yi Zhou, Sai Bi, Sören Pirk, Arie E. Kaufman

Conference on Computer Vision and Pattern Recognition (CVPR), 2024

Project Paper Code

GAIT: Generating Aesthetic Indoor Tours with Deep Reinforcement Learning

Desai Xie, Ping Hu, Xin Sun, Sören Pirk, Jianming Zhang, Radomír Měch, Arie E. Kaufman

International Conference on Computer Vision (ICCV), 2023

Project Paper Code

Misc

A fun fact about my name is that De (德) and Sai (赛) mean democracy and science in Chinese (Wikipedia).

I love training my “Catificial” Intelligence/CatGPT🐈 agent, Purrari, using a blend of supervised learning (instruction finetuning) and RL (treats as positive reward). She understands many words in both English and Mandarin and has mastered numerous tricks. Currently, she is advancing her communication skills through talking buttons. For more cute cat pics and videos, please visit her Instagram, lovingly maintained by her mom.